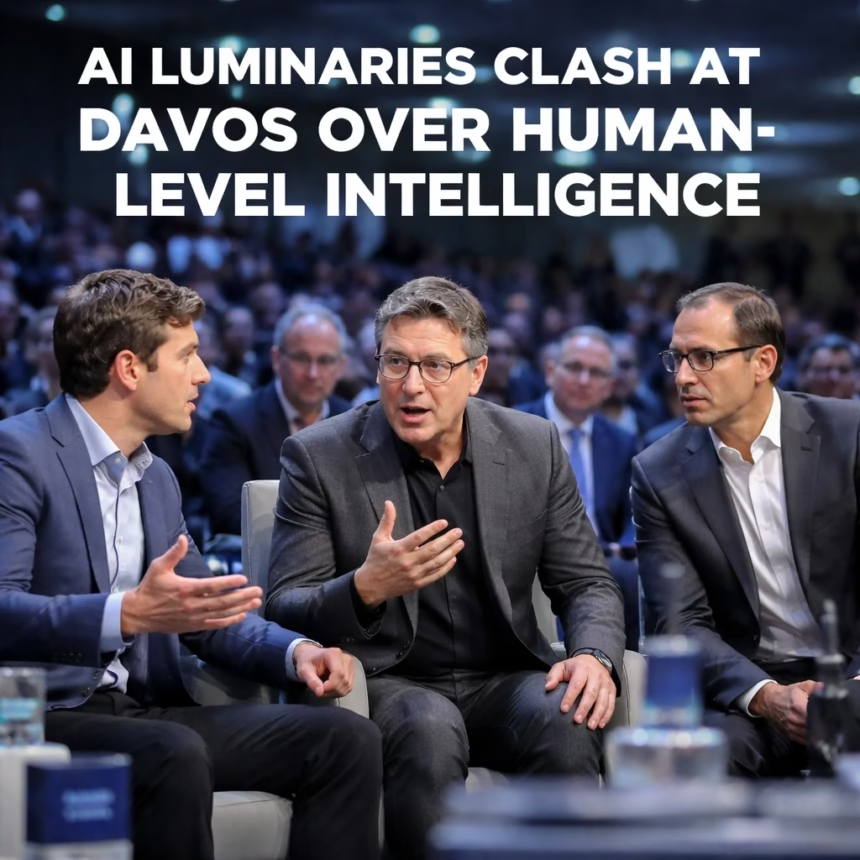

At the recent World Economic Forum annual meeting in Davos, Switzerland, a sharp divergence of opinion emerged among leading artificial intelligence figures over how soon human-level AI might arrive. At the gathering, influential technologists and researchers engaged in spirited debate, exposing a clear schism between those who see Artificial General Intelligence (AGI) as a near-term reality and those who view it as a distant prospect requiring fundamental breakthroughs.

Background: The Enduring Quest for Artificial General Intelligence

The concept of machines achieving human-like intelligence has captivated scientists and philosophers for decades. In 1950, Alan Turing proposed judging a machine's intelligence by whether it could hold a conversation indistinguishable from a human's, a benchmark now known as the Turing Test. The term "artificial intelligence" itself was coined in the proposal for the 1956 Dartmouth Workshop, which laid the groundwork for a field that has since cycled between fervent optimism and disillusioned "AI winters."

Artificial General Intelligence, or AGI, refers to hypothetical AI that can understand, learn, and apply intelligence to any intellectual task that a human being can. Unlike "narrow AI" systems, which excel at specific tasks like chess or image recognition, AGI would possess broad cognitive abilities, including common sense, abstract reasoning, and problem-solving across diverse domains. The pursuit of AGI represents the ultimate ambition for many in the field.

Recent years have witnessed a dramatic resurgence in AI capabilities, largely fueled by advances in deep learning and neural networks and by the availability of vast datasets and computational power. The emergence of large language models (LLMs) such as OpenAI's GPT series has particularly intensified discussions around AGI. These models demonstrate impressive proficiency in generating human-like text, translating languages, and even writing code, prompting many to ask how far away true generalized intelligence might be. This rapid progress set the stage for the contentious discussions at Davos.

Key Developments: The Davos AGI Showdown

The picturesque Swiss Alps became the backdrop for a significant ideological clash among AI's most prominent architects. The debate centered not just on the technical feasibility of AGI, but also on its potential timeline and the very nature of intelligence itself.

The Optimists: AGI on the Horizon

Leading the charge for a more imminent AGI future were figures like Sam Altman, CEO of OpenAI. Altman, whose company's ChatGPT catalyzed much of the current public AI discourse, said he believes AGI could arrive within the next few years, perhaps even by the end of the current decade. His arguments often rest on the empirically observed "scaling laws" of current AI models, under which performance improves predictably as computational power, training data, and model size increase, and on reports that larger models display unexpected "emergent properties" and capabilities. Altman suggests that continued scaling, combined with further architectural refinements, will eventually bridge the gap to general intelligence.

Demis Hassabis, CEO of Google DeepMind, also expressed a degree of optimism, albeit in a more measured tone. While acknowledging the significant technical hurdles, Hassabis indicated that breakthroughs leading to AGI could be achieved within a decade or two. His vision often involves the development of novel AI architectures, potentially multimodal systems that integrate various forms of data and sensory input, moving beyond purely text-based models. Hassabis's work at DeepMind, whose AlphaGo system mastered the game of Go, reflects a belief in the power of advanced learning algorithms to achieve superhuman performance in increasingly complex domains.

These proponents emphasize the accelerating pace of AI development, the exponential growth in available data, and the continuous innovation in hardware. They argue that the current trajectory, if maintained, makes AGI an almost inevitable outcome in the not-too-distant future.

The Skeptics: Fundamental Gaps Remain

On the opposing side, highly respected researchers cautioned against premature declarations of AGI's proximity, highlighting fundamental limitations of current AI paradigms. Yann LeCun, Chief AI Scientist at Meta AI and a Turing Award laureate, was among the most prominent skeptics. LeCun strongly asserted that current AI architectures, particularly transformer-based LLMs, are fundamentally insufficient for achieving true AGI. He criticized these models for their lack of common sense reasoning, their inability to build robust "world models" (an internal understanding of how the world works), and their reliance on vast amounts of data without true comprehension.

LeCun argued that AGI is likely decades away and will require entirely new foundational breakthroughs rather than merely scaling up existing technologies. He emphasized the need for AI systems that can learn more efficiently, perhaps akin to how human infants learn about the physical world through interaction and observation, rather than solely from text corpora. As evidence of these deep conceptual limitations, he pointed to the inability of current LLMs to perform complex planning, to reason robustly, or to truly understand causality.

Andrew Ng, co-founder of Google Brain and DeepLearning.AI, and a professor at Stanford, also adopted a more pragmatic stance. While acknowledging the long-term goal of AGI, Ng emphasized the immediate and profound impact of "narrow AI" in solving specific, real-world problems. He suggested that focusing too heavily on the distant prospect of AGI distracts from the current opportunities to deploy specialized AI systems that can revolutionize industries and improve lives today. Ng's perspective often prioritizes practical applications and tangible benefits over speculative future capabilities, advocating for a more incremental and problem-focused approach to AI development.

These skeptics underscore that true intelligence involves more than pattern matching or statistical correlation; it requires genuine understanding, adaptability, and the ability to operate effectively in unfamiliar environments, capabilities they believe current AI systems demonstrably lack.

Impact: Reshaping Investment, Policy, and Research

The divergent views expressed at Davos carry significant implications across various sectors. The perceived timeline for AGI directly influences strategic decisions and resource allocation.

Investment and Funding

Optimistic projections about AGI's arrival fuel massive investments in AI startups and research initiatives. Venture capitalists and corporate R&D departments are pouring billions into companies promising to accelerate AI capabilities, often betting on the "scaling laws" touted by proponents. Conversely, a more skeptical outlook might redirect funding towards specific, narrow AI applications with clearer immediate returns, or towards fundamental research into entirely new AI paradigms, rather than simply expanding existing ones.

Policy and Regulation

Governments worldwide are grappling with the complex ethical, societal, and economic implications of advanced AI. The urgency of developing robust regulatory frameworks, addressing potential job displacement, and establishing international governance for AI safety hinges significantly on how quickly AGI is expected to materialize. If AGI is believed to be imminent, policymakers might push for rapid, pre-emptive legislation regarding AI alignment, control, and existential risks. If it is seen as a distant prospect, the focus might remain on managing the ethical challenges of current AI applications.

Research Direction

The debate profoundly shapes the direction of academic and industrial AI research. Should resources be primarily allocated to refining and scaling current deep learning architectures, hoping for emergent intelligence? Or should the emphasis shift towards more foundational research into new cognitive architectures, common sense reasoning, symbolic AI, or biologically inspired models that might offer a different path to general intelligence? The clash at Davos highlights this fundamental tension within the research community.

Societal Readiness

Public perception and societal readiness for a world with human-level or super-human AI are also impacted. Fear and excitement both stem from these predictions. Preparing for the ethical dilemmas, economic shifts, and potential societal transformations that AGI could bring requires foresight and planning, which are complicated by widely differing timelines.

What Next: The Road Ahead for AI

The Davos debate underscores that the journey toward AGI, if indeed it is achievable, remains a contested and complex one. Several key areas will likely define the next phase of AI development:

Beyond Transformers

Expect continued research into novel AI architectures that move beyond the limitations of current transformer models, particularly in areas like common sense reasoning, causal inference, and world modeling. This could involve hybrid approaches combining neural networks with symbolic reasoning, or entirely new paradigms.

Multimodal AI

A strong focus will remain on developing AI systems that can process and integrate information from multiple modalities – text, images, video, audio, and even physical interaction. This is seen by many as crucial for building a more comprehensive understanding of the world, akin to human cognition.

Robust Evaluation Metrics

As AI capabilities advance, the need for more sophisticated and comprehensive evaluation metrics for "intelligence" will grow. Current benchmarks often fall short of truly assessing generalized cognitive abilities, making it difficult to objectively measure progress towards AGI.

Ethical Frameworks and Governance

Regardless of the AGI timeline, discussions around AI ethics, safety, and governance will intensify. International collaborations, industry standards, and regulatory bodies will continue to evolve to address the societal impacts of increasingly powerful AI systems.

The clash at Davos serves as a powerful reminder that while AI has made breathtaking strides, the path to true human-level intelligence remains a subject of intense scientific and philosophical debate. The coming years should reveal whether the optimists or the skeptics were closer to the mark.